Trying to manage scattered business data and make it actionable? ETL pipeline development automates the extraction, transformation, and loading of data from multiple sources into a single, reliable system, helping businesses make smarter, faster decisions.

As companies grow, data is generated from CRMs, marketing tools, and operational databases, making quality and consistency a significant challenge. A robust ETL workflow for data engineering ensures data is cleansed and ready for analysis. With AI-enhanced ETL pipelines, organizations can now generate and update ETL logic in minutes, not days, reducing manual effort by up to 70%.

Modern businesses use cloud-based ETL services and automation tools to focus more on insights than data handling. By combining AI and automated ETL pipelines, companies can turn complex, multi-source data into actionable insights, improve decision-making, and build a strong foundation for growth.

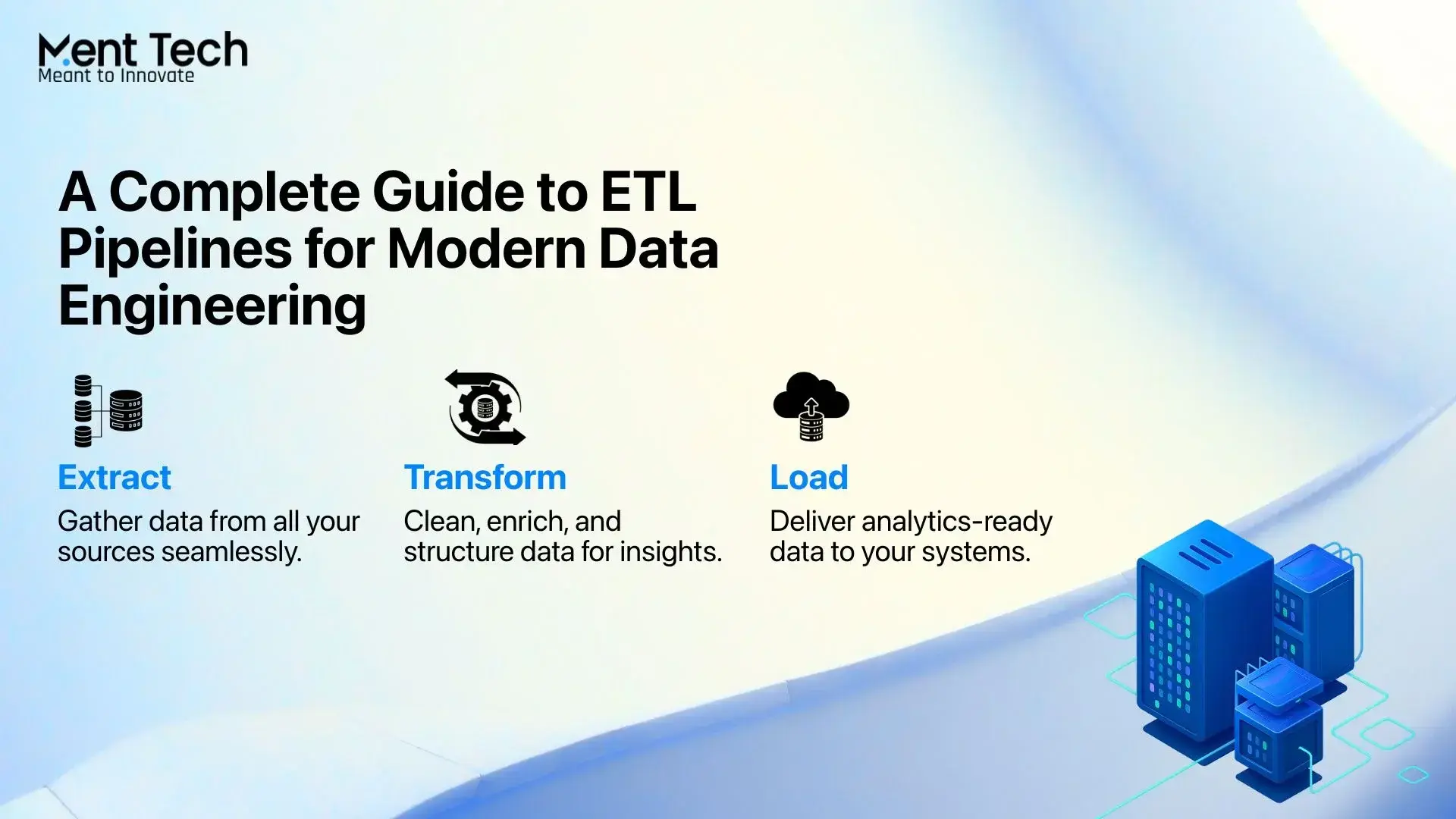

What is an ETL Pipeline?

An ETL pipeline automates the process of extracting, transforming, and loading data from multiple sources into a centralized system, like a cloud repository or data warehouse. This ensures clean, consistent, and ready-to-use data for AI, Web3, and blockchain applications, enabling faster insights and smarter decisions.

Modern pipelines leverage AI-powered automation to handle complex transformations, detect anomalies, and optimize workflows in real time. This allows businesses to focus on innovation and strategy, rather than manual data processing, making analytics, reporting, and decentralized applications more efficient and reliable.

Global 2025 reports indicate that 63% of enterprises still cannot trust their data because of fragmented, patched-together pipelines. AI is widening this gap, since every new model demands more reliable data. As a result, ETL automation has shifted from backend tooling to a core business advantage, powering faster innovation, personalization, and measurable revenue outcomes.

Key Advantages of ETL Data Pipelines

ETL data pipelines automate the extraction, transformation, and loading of data from multiple sources into a centralized system. They ensure high-quality, consistent, and actionable data, enabling faster insights, better analytics, and smarter business decisions.

1. Improved Data Quality

ETL pipelines clean, validate, and transform data to ensure accuracy, consistency, and reliability, helping AI models and analytics generate actionable insights with far fewer errors and inconsistencies.
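Here is a minimal Python sketch of rule-based record validation. It shows what "validate" can mean in practice by separating valid rows from rejected ones. The field names (`customer_id`, `email`, `amount`) and the rules are hypothetical examples, not a standard schema.

```python
import re

# Hypothetical validation rules; the email pattern is deliberately simple.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def validate(record: dict) -> list[str]:
    """Return a list of rule violations for one record (empty list = valid)."""
    errors = []
    if not record.get("customer_id"):
        errors.append("missing customer_id")
    if not EMAIL_RE.match(record.get("email") or ""):
        errors.append("invalid email")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    return errors

def split_valid(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Partition records into valid rows and (row, errors) rejects."""
    valid, rejected = [], []
    for rec in records:
        errs = validate(rec)
        if errs:
            rejected.append((rec, errs))
        else:
            valid.append(rec)
    return valid, rejected

rows = [
    {"customer_id": "C1", "email": "a@example.com", "amount": 42.0},
    {"customer_id": "", "email": "not-an-email", "amount": -5},
]
good, bad = split_valid(rows)
print(len(good), len(bad))  # 1 1
```

The sketch keeps each rejected row together with its reasons instead of silently dropping it. This makes data quality problems visible and auditable.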

2. Enhanced Data Integration

They consolidate data from multiple sources, including databases, APIs, and blockchain systems, creating a unified view of operations and supporting seamless ETL data pipeline development for better decision-making.

3. Faster Time to Insights

Automated workflows ensure that data is immediately ready for analysis, accelerating reporting, dashboards, and predictive models while enabling teams to act on critical opportunities faster.

4. Scalability for Growing Data

Modern ETL pipelines handle growing volumes of structured and unstructured data from cloud, Web3, or AI applications, making them ideal for scalable solutions integrated with MLOps & AI Infrastructure.

5. Operational Efficiency

Automation reduces manual effort and human errors, streamlining repetitive tasks and freeing teams to focus on innovation, strategic planning, and value-added initiatives across the organization.

6. Support for Advanced Analytics

Clean, structured, and centralized data is ready for AI, machine learning, and decentralized application analytics, enabling predictive insights, anomaly detection, and smarter business strategies.

7. Regulatory Compliance and Security

ETL pipelines enforce encryption, access controls, and governance rules, ensuring sensitive data is secure and compliant with GDPR, HIPAA, and other regulatory requirements while maintaining operational transparency.
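Pseudonymizing sensitive fields before they are loaded is one technique that can complement encryption and access controls. The Python sketch below replaces selected values with a keyed SHA-256 hash (HMAC). The key and the `SENSITIVE_FIELDS` list are hypothetical. Pseudonymized data can still count as personal data under GDPR, so this does not replace the other controls.

```python
import hashlib
import hmac

# Assumption: in a real deployment this key comes from a secrets manager,
# never from source code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

SENSITIVE_FIELDS = {"email", "phone"}  # hypothetical field list

def pseudonymize(record: dict) -> dict:
    """Replace sensitive values with a keyed SHA-256 digest (HMAC).

    The same input always maps to the same token, so joins across tables
    still work, but the raw value is not stored in the warehouse.
    """
    out = {}
    for key, value in record.items():
        if key in SENSITIVE_FIELDS and value is not None:
            digest = hmac.new(PSEUDONYM_KEY, str(value).encode("utf-8"), hashlib.sha256)
            out[key] = digest.hexdigest()
        else:
            out[key] = value
    return out

row = {"customer_id": "C1", "email": "a@example.com", "country": "DE"}
masked = pseudonymize(row)
print(masked["customer_id"], len(masked["email"]))  # C1 64
```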

ETL Pipeline vs Data Pipeline

A data pipeline moves data between systems, while an ETL pipeline also transforms it for analytics and reporting, helping businesses use data effectively.

Data Pipeline

A data pipeline is any system that automatically moves data from one location to another. It can be as simple as syncing records between databases or as complex as managing real-time streaming, alerts, or workflows across cloud platforms. Some pipelines transfer data as-is, prioritizing speed and reliability, using methods like batch processing, streaming, ELT, or replication.

ETL Pipeline

An ETL pipeline is a specialized type of data pipeline that not only moves data but also transforms it. It extracts data from multiple sources, cleans, reshapes, and enriches it, then loads it into structured destinations such as data warehouses. The transformation step ensures that the data is accurate, consistent, and ready for analytics, reporting, or dashboards, and supports processes like AI for KYC.
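The Extract → Transform → Load flow can be sketched end to end in a few lines of Python. This is a minimal illustration under two assumptions: a hypothetical CSV export serves as the source, and an in-memory SQLite database stands in for the data warehouse. Production pipelines would use dedicated connectors and a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source: a CSV export from an orders system.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,bob,5.00
2,bob,5.00
3,Carol,not_a_number
"""

def extract(text: str) -> list[dict]:
    """Extract: parse raw CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: trim and title-case names, cast amounts, drop bad rows and duplicates."""
    seen, out = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount cannot be parsed
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # skip duplicate orders
        seen.add(order_id)
        out.append((order_id, row["customer"].strip().title(), round(amount, 2)))
    return out

def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into a warehouse-style table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```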

Key Differences

| Aspect | Data Pipeline | ETL Pipeline |
| --- | --- | --- |
| Scope | Broad systems focused on moving data from one place to another with minimal processing involved. | Narrower and more process-driven, always following the Extract → Transform → Load model. |
| Processing | May transfer raw or lightly processed data; can work in batch mode or real-time streaming scenarios. | Always includes data transformation before loading, usually optimized for structured analytics use cases. |
| Use Cases | Ideal for data replication, streaming ingestion, event processing, IoT data movement, or syncing multiple systems. | Designed for analytics, BI dashboards, reporting, and preparing datasets for data warehouses. |
| Complexity | Can be simple or advanced, depending on the architecture and business requirements. | Usually more complex due to transformation logic, data quality rules, cleaning, and enrichment steps. |
| Destination | Can deliver data to any endpoint, such as databases, data lakes, APIs, operational systems, and apps. | Mostly targets structured destinations, such as data warehouses, where analytics-ready data is required. |
| Tools & Technologies | Can use stream processing frameworks (Apache Kafka, Apache Flink), orchestration tools (Airflow), or custom scripts. | Uses specialized ETL tools such as Talend, AWS Glue, Informatica, or Pentaho for structured workflows. |
| Timing / Latency | Flexible timing: batch, real-time, or hybrid, depending on business needs. | Often batch-oriented; real-time ETL is emerging for low-latency analytics. |
| Data Quality & Transformation | May include minimal or no transformation; focuses on moving raw or lightly processed data. | Validation, enrichment, and transformation to ensure analytics-ready data. |

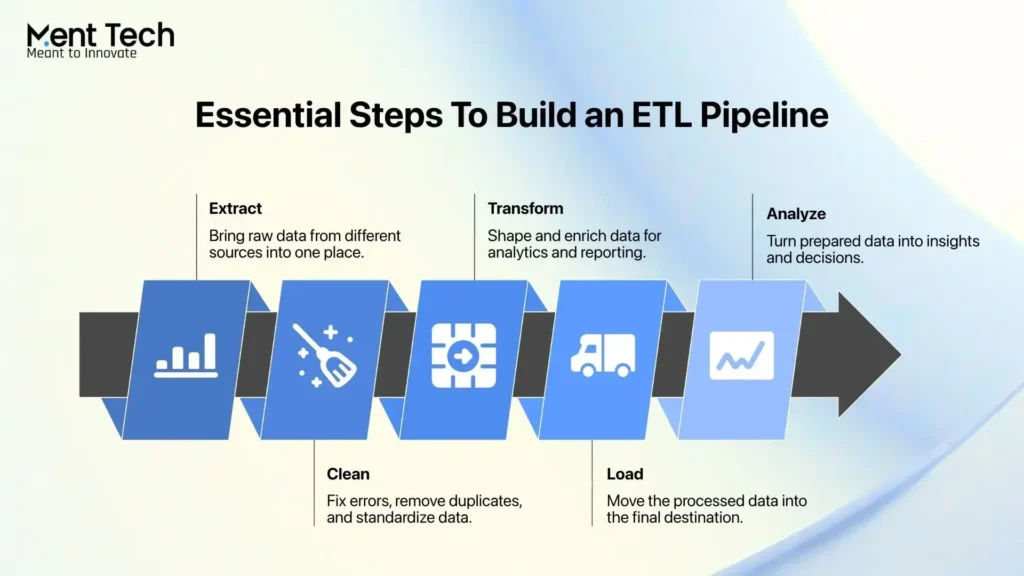

Essential Steps To Build an ETL Pipeline

Building an ETL pipeline follows a structured flow where data is first extracted from multiple sources, then cleaned, validated, and transformed as per business rules. Finally, the refined data is loaded into a target storage system, enabling accurate analytics, reporting, and decision-making across the organization.

1. Extract

In this stage, data is collected from various systems such as databases, APIs, CRMs, logs, and flat files. The goal is to bring raw data into a staging environment for initial checks. This stage sets the foundation for ETL pipeline development and helps confirm that incoming data is complete and reliable before it moves forward.
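Here is a minimal Python sketch of extraction into a staging area. It pulls rows from two differently formatted sources and tags each row with a `_source` lineage field. The CSV and JSON payloads stand in for a CRM export and an API response, and the `_source` convention is a hypothetical choice for illustration.

```python
import csv
import io
import json

# Hypothetical raw payloads standing in for a CRM CSV export and an API response.
CRM_CSV = "id,email\n101,ann@example.com\n102,raj@example.com\n"
BILLING_JSON = '[{"customer_id": 101, "plan": "pro"}, {"customer_id": 103, "plan": "free"}]'

def extract_csv(text: str, source: str) -> list[dict]:
    """Parse CSV text and tag every row with the system it came from."""
    return [{**row, "_source": source} for row in csv.DictReader(io.StringIO(text))]

def extract_json(text: str, source: str) -> list[dict]:
    """Parse a JSON array of objects and tag every row with its source."""
    return [{**row, "_source": source} for row in json.loads(text)]

# Staging area: raw rows are kept as-is, plus lineage metadata, for initial checks.
staging = extract_csv(CRM_CSV, "crm") + extract_json(BILLING_JSON, "billing")
print(len(staging), sorted({row["_source"] for row in staging}))  # 4 ['billing', 'crm']
```

No cleaning happens here on purpose. CSV values stay as strings and JSON values keep their types, so later steps can inspect the data exactly as it arrived.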

2. Clean

Cleaning removes duplicates, incorrect entries, formatting issues, and inconsistencies. This step improves data accuracy and prevents errors from spreading across the pipeline. Clean, standardized data directly contributes to better machine learning development and analytics outcomes.
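Here is a minimal Python sketch of a cleaning step. It standardizes formats, drops unusable rows, and removes duplicates. The example assumes records are keyed by email and that the last occurrence of a duplicate wins. Both rules are hypothetical and vary between real pipelines.

```python
def clean(rows: list[dict]) -> list[dict]:
    """Standardize formats, drop unusable rows, and remove duplicates.

    Assumptions (illustrative): rows are keyed by 'email'; the last
    occurrence of a duplicate wins; a row without an email is unusable.
    """
    by_email: dict[str, dict] = {}
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        if not email:
            continue  # incomplete entry: nothing to key on
        name = " ".join((row.get("name") or "").split())  # collapse stray whitespace
        by_email[email] = {"email": email, "name": name.title()}
    return list(by_email.values())

raw = [
    {"email": " Ann@Example.com ", "name": "ann   lee"},
    {"email": "ann@example.com", "name": "Ann Lee"},
    {"email": "", "name": "ghost"},
]
print(clean(raw))  # [{'email': 'ann@example.com', 'name': 'Ann Lee'}]
```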

3. Transform

Here, data is reshaped, enriched, and structured for analysis. Business rules ensure it aligns with reporting and data warehousing needs, making it insight-ready while complying with Responsible AI & Governance standards.
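Here is a minimal Python sketch of a transformation that applies business rules. It enriches an order with a region lookup, derives a USD amount, and flags large orders. The exchange rates, region mapping, and 1,000 USD threshold are hypothetical placeholders, not live data or recommended values.

```python
# Hypothetical reference data used to enrich orders during transformation.
FX_TO_USD = {"USD": 1.0, "EUR": 1.10}  # illustrative rates, not live data
REGION_BY_COUNTRY = {"DE": "EMEA", "US": "AMER"}

def enrich_order(order: dict) -> dict:
    """Reshape a cleaned order into the shape the reporting layer expects."""
    amount_usd = round(order["amount"] * FX_TO_USD[order["currency"]], 2)
    return {
        "order_id": order["order_id"],
        "region": REGION_BY_COUNTRY.get(order["country"], "UNKNOWN"),  # enrichment
        "amount_usd": amount_usd,                                      # derived field
        "is_large_order": amount_usd >= 1000,                          # business rule
    }

print(enrich_order({"order_id": 7, "amount": 1000.0, "currency": "EUR", "country": "DE"}))
# {'order_id': 7, 'region': 'EMEA', 'amount_usd': 1100.0, 'is_large_order': True}
```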

4. Load

In this step, the processed data is loaded into the target destination, usually a data warehouse or analytics platform. Loading can be a full load or an incremental load, depending on how much data has changed and the overall volume. Automated loading ensures consistency and accessibility for analytics teams.
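Incremental loading is often implemented with a high-water mark: only rows newer than the latest timestamp already in the target are written. The Python sketch below uses in-memory SQLite as a stand-in warehouse and assumes a hypothetical `facts` table with ISO-8601 `updated_at` strings. A simple watermark like this would miss late-arriving rows that carry older timestamps.

```python
import sqlite3

def incremental_load(conn: sqlite3.Connection, rows: list[tuple]) -> int:
    """Load only rows newer than the last high-water mark; return rows written.

    Assumptions (illustrative): each row is (id, value, updated_at) and
    updated_at is an ISO-8601 string, so string comparison orders correctly.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS facts (id INTEGER PRIMARY KEY, value TEXT, updated_at TEXT)"
    )
    (watermark,) = conn.execute("SELECT COALESCE(MAX(updated_at), '') FROM facts").fetchone()
    new_rows = [r for r in rows if r[2] > watermark]
    # INSERT OR REPLACE overwrites any existing row with the same primary key.
    conn.executemany("INSERT OR REPLACE INTO facts VALUES (?, ?, ?)", new_rows)
    conn.commit()
    return len(new_rows)

conn = sqlite3.connect(":memory:")
batch = [(1, "a", "2025-01-01T00:00:00"), (2, "b", "2025-01-02T00:00:00")]
print(incremental_load(conn, batch))  # 2 (first run behaves like a full load)
batch.append((1, "a2", "2025-01-03T00:00:00"))
print(incremental_load(conn, batch))  # 1 (only the changed row is written)
```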

5. Analyze

Once the data is loaded, teams can query, visualize, and derive insights to support decisions. Analysis unlocks the value of ETL pipeline development, turning prepared data into business intelligence, KPIs, forecasts, and operational optimization.

Types of Data Pipelines Used in Real-world Implementations

Different types of data pipelines are used based on how businesses need to move, process, and analyze data. Each model supports specific workloads and ensures data flows efficiently for analytics, operations, and decision-making.

1. Batch Processing Pipelines

Batch pipelines collect and process data in large groups on fixed schedules rather than continuously. They are ideal when speed is not critical and datasets are large and complex.

Key Pointers:

- Best for periodic historical analysis

- Reduces compute cost across ETL pipeline workflows

- Works well with daily/weekly reporting cycles
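Here is a minimal Python sketch of batch processing. A scheduled job works through a large dataset in fixed-size chunks instead of all at once. The `nightly_job` function is hypothetical, and a scheduler such as cron or Airflow would trigger it in practice. Summing amounts stands in for real transform-and-load work.

```python
from collections.abc import Iterable, Iterator
from itertools import islice

def batches(records: Iterable, size: int) -> Iterator[list]:
    """Yield fixed-size chunks so a large dataset is processed group by group."""
    it = iter(records)
    while chunk := list(islice(it, size)):
        yield chunk

def nightly_job(records: Iterable[float], batch_size: int = 1000) -> float:
    """Hypothetical scheduled job: sum amounts one batch at a time."""
    total = 0.0
    for chunk in batches(records, batch_size):
        total += sum(chunk)  # in practice: transform and bulk-load each chunk
    return total

print(nightly_job(range(10_000), batch_size=2_500))  # 49995000.0
```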

2. Real-Time Processing Pipelines

Real-time pipelines process data instantly as it arrives, enabling organizations to react immediately to changes or patterns. This supports faster, proactive decision-making.

Key Pointers:

- Suitable for fraud detection, alerts, social feeds

- Continuous event monitoring

- Enables instant business actions
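Real-time processing handles each event as soon as it arrives instead of waiting for a scheduled batch. The Python sketch below models this with a per-event handler. The amount threshold and the `alert` callback are hypothetical. In production, the handler would typically be called by a message-queue consumer rather than a local loop.

```python
from collections.abc import Callable

def make_processor(threshold: float, alert: Callable[[dict], None]) -> Callable[[dict], None]:
    """Build a handler that is invoked once per event, as soon as it arrives."""
    def process(event: dict) -> None:
        if event["amount"] > threshold:
            alert(event)  # act immediately instead of waiting for a batch
    return process

alerts = []
process = make_processor(threshold=500.0, alert=alerts.append)
for event in [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 900.0}]:
    process(event)  # in production this would be a consumer callback
print([e["id"] for e in alerts])  # [2]
```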

3. Data Streaming Pipelines

Streaming pipelines (event-driven) handle continuous data flows from sensors, mobile apps, IoT devices, and user interactions in motion. Best suited for near real-time analytics.

Key Pointers:

- Continuous ingestion and transformation

- Ideal for IoT pipelines and log analytics

- Supports low-latency decision cycles
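A common streaming transformation is a tumbling-window aggregate. Readings are averaged over fixed, non-overlapping time windows, and each result is emitted as soon as its window closes. Here is a minimal Python sketch that assumes events arrive in timestamp order as `(timestamp_seconds, value)` pairs. Engines such as Apache Flink also handle out-of-order events, which this sketch does not.

```python
from collections.abc import Iterable, Iterator

def tumbling_window_avg(
    events: Iterable[tuple[int, float]], window_seconds: int
) -> Iterator[tuple[int, float]]:
    """Emit (window_start, average) for each fixed, non-overlapping window.

    Assumes events arrive in timestamp order; a window is emitted as soon as
    an event from a later window shows up, plus a final flush at the end.
    """
    current, total, count = None, 0.0, 0
    for ts, value in events:
        start = ts - ts % window_seconds
        if current is not None and start != current:
            yield current, total / count  # window closed: emit its result
            total, count = 0.0, 0
        current = start
        total += value
        count += 1
    if count:
        yield current, total / count  # flush the last open window

readings = [(0, 20.0), (30, 22.0), (61, 30.0), (119, 34.0), (125, 18.0)]
print(list(tumbling_window_avg(readings, window_seconds=60)))
# [(0, 21.0), (60, 32.0), (120, 18.0)]
```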

4. Data Integration Pipelines

Integration pipelines combine data from multiple systems into a unified, consistent, and analytics-ready structure. They bridge general data pipeline and ETL pipeline architectures.

Key Pointers:

- Cleans and enriches raw, fragmented data

- Converts formats into unified structures

- Makes data reliable for BI, dashboards & reporting
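Here is a minimal Python sketch of an integration step. Two sources with different field names and units are mapped onto one schema and joined on a customer ID. The source schemas (`CustID`, `Email`, `mrr_cents`) and the target schema are hypothetical.

```python
# Hypothetical source schemas: the CRM and billing systems name and format fields differently.
crm = [{"CustID": "101", "Email": "Ann@Example.com"}]
billing = [{"customer_id": 101, "mrr_cents": 4900}, {"customer_id": 102, "mrr_cents": 990}]

def _blank(cid: int) -> dict:
    """Empty record in the unified target schema."""
    return {"customer_id": cid, "email": None, "mrr_usd": None}

def unify(crm_rows: list[dict], billing_rows: list[dict]) -> list[dict]:
    """Map both sources onto one schema and join them on customer ID."""
    customers: dict[int, dict] = {}
    for r in crm_rows:
        cid = int(r["CustID"])  # normalize ID type (string -> int)
        customers.setdefault(cid, _blank(cid))["email"] = r["Email"].lower()
    for r in billing_rows:
        cid = r["customer_id"]
        customers.setdefault(cid, _blank(cid))["mrr_usd"] = r["mrr_cents"] / 100  # cents -> dollars
    return [customers[k] for k in sorted(customers)]

print(unify(crm, billing))
```

Customer 102 appears with `email` set to `None` because it exists only in the billing source. An outer-join style like this keeps the gaps visible instead of hiding them.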

Real-World ETL Pipeline Use Cases Transforming Modern Businesses

Modern organizations use ETL pipelines to automate data movement, improve analytics accuracy, and drive more informed decision-making. With efficient ETL pipeline development, businesses convert raw data into high-impact insights faster and at scale.

1) Business Intelligence & Reporting

ETL workflows consolidate departmental data into centralized dashboards and KPIs, ensuring leaders act on trusted data while enabling AI Voice Agent systems for intelligent, data-driven interactions.

2) CRM & Customer Insights

ETL pipelines merge customer data from apps, websites, billing, and support touchpoints into a unified CRM, enabling customer 360, churn prediction, and targeted engagement, while supporting Web3 Wallet Development initiatives.

3) Machine Learning & Data Science Prep

ETL automation speeds up feature preparation, normalization, and transformation work so data scientists don’t waste time cleaning data manually. This accelerates model development and real-world ML deployment.
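Min-max scaling is one common normalization step in feature preparation. It rescales a numeric feature to the range [0, 1]. Here is a minimal Python sketch. In a real ML pipeline, the minimum and maximum would usually be computed on training data and reused for new data, which this standalone function does not do.

```python
def min_max_scale(values: list[float]) -> list[float]:
    """Rescale a numeric feature to the [0, 1] range (min-max normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]

print(min_max_scale([10.0, 15.0, 20.0]))  # [0.0, 0.5, 1.0]
```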

4) Fraud Detection & Risk Monitoring

Financial apps and banks rely on ETL pipelines to enrich transactions with historical patterns and feed anomaly detection models. This reduces fraud exposure and supports real-time prevention.
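Here is a minimal Python sketch of enriching a transaction with historical patterns. The customer's past mean is attached, and the transaction is flagged when its z-score exceeds a threshold. A simple z-score rule stands in for a real anomaly detection model. The `history` structure and the threshold of 3.0 are hypothetical.

```python
from statistics import mean, pstdev

def enrich_and_flag(history: dict[str, list[float]], txn: dict, z_threshold: float = 3.0) -> dict:
    """Attach the customer's historical stats to a transaction and flag outliers."""
    past = history.get(txn["customer_id"], [])
    if len(past) < 2 or pstdev(past) == 0:
        # Not enough (or no varying) history to score this transaction.
        return {**txn, "z_score": None, "suspicious": False}
    mu, sigma = mean(past), pstdev(past)
    z = (txn["amount"] - mu) / sigma
    return {**txn, "hist_mean": mu, "z_score": round(z, 2), "suspicious": abs(z) > z_threshold}

history = {"C1": [20.0, 25.0, 30.0, 22.0, 28.0]}
print(enrich_and_flag(history, {"customer_id": "C1", "amount": 400.0})["suspicious"])  # True
```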

5) E-commerce Personalization & Recommendations

ETL pipelines combine product data, purchase logs, user behavior, and pricing signals to power recommendation engines, boosting conversions, average cart value, and customer lifetime value.

6) IoT Sensor & Telemetry Processing

IoT systems generate high-velocity continuous data. ETL pipelines help aggregate, filter, and format sensor streams for anomaly detection, predictive maintenance, and operational optimization.

7) Data Migration & Application Modernization

During cloud adoption or infrastructure upgrades, ETL pipelines ensure structured, clean, and reliable movement of legacy data, simplifying modernization and reducing migration failures across systems.
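One way to reduce migration failures is to reconcile source and target after the move. This means comparing row counts and an order-independent checksum. Here is a minimal Python sketch. It assumes both sides are read into Python with identical value types, because the fingerprint is built from each row's `repr`. Real reconciliations usually run checksums inside each database instead.

```python
import hashlib

def table_fingerprint(rows: list[tuple]) -> tuple[int, str]:
    """Return (row_count, order-independent checksum) for a set of rows."""
    digests = sorted(hashlib.sha256(repr(row).encode("utf-8")).hexdigest() for row in rows)
    combined = hashlib.sha256("".join(digests).encode("utf-8")).hexdigest()
    return len(rows), combined

legacy = [(1, "Ann"), (2, "Bo")]
migrated = [(2, "Bo"), (1, "Ann")]  # same data, different order
print(table_fingerprint(legacy) == table_fingerprint(migrated))  # True
```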

Future of ETL and Data Pipeline Development

ETL and data pipeline development is evolving toward real-time, automated, and AI-driven workflows, enabling faster, smarter, and more scalable data integration and analytics.

• Real-Time & Streaming ETL

Organizations are moving from traditional batch processes to real-time data integration, enabling instant access to critical insights. Stream-based ETL pipelines powered by tools like Apache Kafka and Flink ensure timely analytics and rapid decision-making.

• Cloud-Native ETL Solutions

Cloud-based ETL services offer elasticity, reduced infrastructure costs, and simplified maintenance. Platforms like AWS Glue, Google Cloud Dataflow, and Azure Data Factory are redefining how organizations build scalable, enterprise-grade pipelines.

• AI & Machine Learning-Enhanced ETL

Intelligent ETL pipelines leverage AI and ML to automate data quality checks, detect anomalies, optimize performance, and even suggest transformations. This trend is transforming traditional ETL pipeline development processes into self-optimizing systems.

• Self-Service ETL for Business Users

Modern ETL tools empower non-technical users to design, manage, and deploy pipelines independently. Automated ETL pipelines for enterprises are becoming a key enabler of data democratization and faster business insights.

• Metadata-Driven & Dynamic Pipelines

Metadata-driven ETL generates workflows dynamically from metadata, reducing manual configuration and improving adaptability. This approach supports agile ETL workflows for data engineering, simplifying maintenance and scaling in complex data environments.
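Here is a minimal Python sketch of the metadata-driven idea. The pipeline is described as data (`PIPELINE_SPEC`), and a small registry turns each entry into a function call, so changing the workflow means editing metadata rather than code. The step names, parameters, and registry are hypothetical. Real systems often keep this metadata in YAML, a database, or a data catalog.

```python
# Hypothetical pipeline metadata: in practice this might live in YAML, a database, or a catalog.
PIPELINE_SPEC = [
    {"step": "rename", "mapping": {"Cust_Name": "customer"}},
    {"step": "cast", "column": "amount", "to": "float"},
    {"step": "filter_min", "column": "amount", "min_value": 0},
]

def _rename(rows, mapping):
    return [{mapping.get(k, k): v for k, v in r.items()} for r in rows]

def _cast(rows, column, to):
    caster = {"float": float, "int": int, "str": str}[to]
    return [{**r, column: caster(r[column])} for r in rows]

def _filter_min(rows, column, min_value):
    return [r for r in rows if r[column] >= min_value]

# Registry mapping step names in the metadata to the functions that implement them.
STEPS = {"rename": _rename, "cast": _cast, "filter_min": _filter_min}

def run_pipeline(rows, spec):
    """Build and run the workflow from metadata instead of hard-coded calls."""
    for cfg in spec:
        params = {k: v for k, v in cfg.items() if k != "step"}
        rows = STEPS[cfg["step"]](rows, **params)
    return rows

raw = [{"Cust_Name": "Ann", "amount": "12.5"}, {"Cust_Name": "Bo", "amount": "-1"}]
print(run_pipeline(raw, PIPELINE_SPEC))  # [{'customer': 'Ann', 'amount': 12.5}]
```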

Conclusion

ETL pipelines are reshaping how companies turn raw information into usable, actionable intelligence. This evolution isn’t just about processing data faster. It’s about empowering smarter decisions, stronger analytics outcomes, and scalable data operations in an increasingly AI-focused future.

Ment Tech, a leading Data Engineering & ETL development company, builds secure, optimized, and high-performing ETL pipelines tailored for modern analytics ecosystems. From data warehouse modernization to ML-ready processing, enrichment, and real-time transformation, we help organizations unlock true value from data.

Contact us today to discuss how we can help transform your ETL vision into a scalable, intelligent data foundation.

FAQs

ETL pipelines are built to make data usable for business decisions. Their purpose is to convert raw, scattered data into analytics-ready, consistent, and trusted data that fuels BI dashboards, predictive models, and revenue growth.

A data pipeline may only move or sync data from one place to another. ETL pipelines also transform, clean, normalize, and enrich the data, which makes them more valuable for analytics, reporting, governance, and enterprise intelligence.

Earlier, ETL was mostly batch processing. Now, modern ETL can also run as real-time streaming or micro-batch jobs, using tools such as Kafka, Flink, Snowflake, Fivetran, dbt, and other cloud-native ETL services adopted by modern data engineering teams and machine learning development workflows.

A typical modern stack includes dbt for transformations, Airflow or Dagster for orchestration, Snowflake, BigQuery, or Redshift as the warehouse, and Kafka for ingestion. Cloud ETL services such as AWS Glue further simplify management and scaling.

Choose ELT if your warehouse has ample compute capacity and you prefer to run transformations inside it. Choose ETL if you need strict transformation rules applied before loading, or if you work with legacy data systems and compliance-heavy workflows.

Simple to mid-size solutions typically take 1 to 4 weeks, while enterprise multi-source ETL takes 6 to 12 weeks. Most of the time goes into schema definition, lineage, data quality, and transformation logic rather than coding, and this applies even to Smart Contract Development data flows.