Apple’s delay in upgrading Siri has thrown a spotlight on its AI shortcomings and raised questions about its next big move. The postponed improvements, once expected to launch in 2025, promised features like smarter app control and deeper contextual understanding. The setback underscores Apple’s cautious approach and fuels debate over how it will keep pace with rivals that are moving faster on voice assistant innovation.

Behind the scenes, Apple is working on more than just visible updates. Reports suggest a complete overhaul of Siri’s infrastructure to make it more reliable, secure, and capable of handling high-stakes tasks without error.

While the delay may frustrate users, it hints at a bigger goal: transforming Apple’s AI-powered Siri into a truly intelligent, voice-first assistant that seamlessly integrates with both Apple and third-party apps. If successful, these AI improvements to Siri could redefine how millions interact with their devices.

Background on Apple Intelligence & Siri Updates

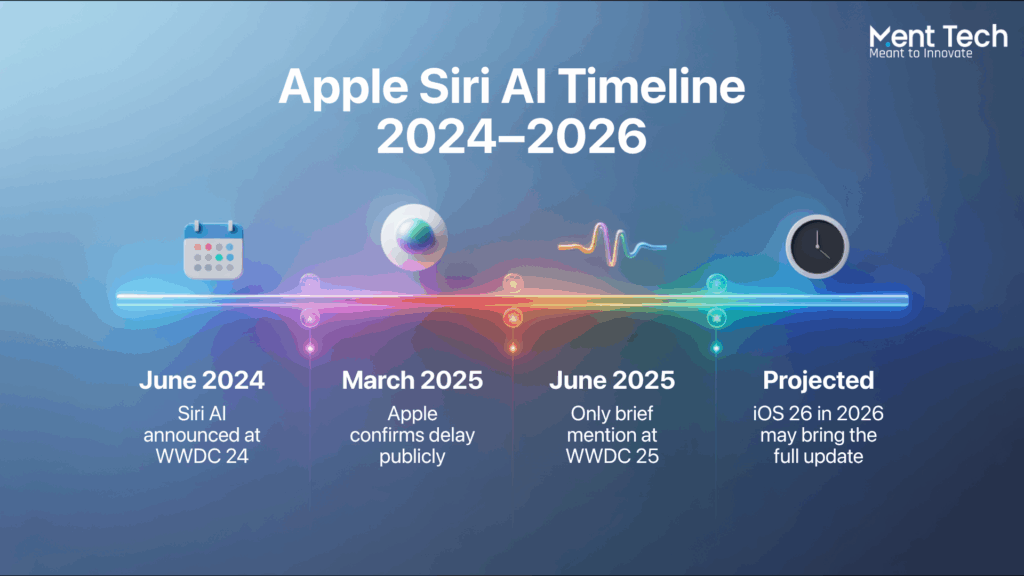

Apple first unveiled Apple Intelligence at WWDC 2024, showcasing a significantly upgraded Siri with contextual understanding, deeper app integrations, and advanced generative AI capabilities. The rollout was initially scheduled to begin in late 2024 with the release of iOS 18.

However, several flagship features, such as enhanced personal context recognition and improved app control, have now been postponed to spring 2026. The unexpected delay has sparked discussion in the tech community, especially among AI-as-a-Service companies and developers closely tracking Apple’s AI roadmap.

Key Reasons for the Delays

1. Technical Challenges

Apple’s insistence on on-device AI processing to safeguard user privacy has introduced significant engineering hurdles. Many of these advanced AI functions require high computational power, which creates tension between privacy goals and performance needs. Testing revealed inconsistent results, particularly in handling extended contextual AI queries, executing voice commands seamlessly, and maintaining low latency with minimal memory consumption.

Additionally, security concerns such as potential vulnerabilities from prompt injection attacks have driven Apple to enforce exceptionally high quality and safety benchmarks before public release.

2. Organizational Factors

Internally, the Siri team has faced coordination gaps between AI research units and core software development teams. Leadership instability within the Siri division has also played a role, compounded by the departure of around a dozen AI specialists to competitors like Meta and OpenAI. The excitement generated by Apple’s WWDC 2024 announcements set user expectations that some features were not yet prepared to meet.

3. Strategic Approach

Apple’s leadership continues to favor a “quality-first” approach, prioritizing reliability over rapid deployment. While this strategy is consistent with Apple’s long-standing development philosophy, critics argue that it risks slowing innovation in a sector where competitors are moving much faster.

What Apple’s Siri Delay Means for the AI Industry

Apple announced the AI-powered version of Siri more than a year ago, promising users a smarter, more context-aware assistant that could take action inside apps and understand personal relationships, messages, and habits.

But at WWDC 2025, Apple confirmed the update is delayed until at least 2026. According to Bloomberg, internal testing showed Siri’s AI features failed one-third of the time, a failure rate too risky to ship to millions of users on iOS 26.

For the broader AI industry, this delayed Siri upgrade is telling. At a time when OpenAI updates its models quarterly and AI startups launch new products almost every week, Apple’s decision highlights a key vulnerability: big tech moves slowly, and its bar for quality is high.

AI startup opportunities in 2025 are no longer about pushing out demos; they are about shipping reliable, responsive tools that can live inside messaging apps, mobile devices, or custom workflows. A delay from Apple gives smaller teams a clear path to build smarter alternatives that move faster and serve users better.

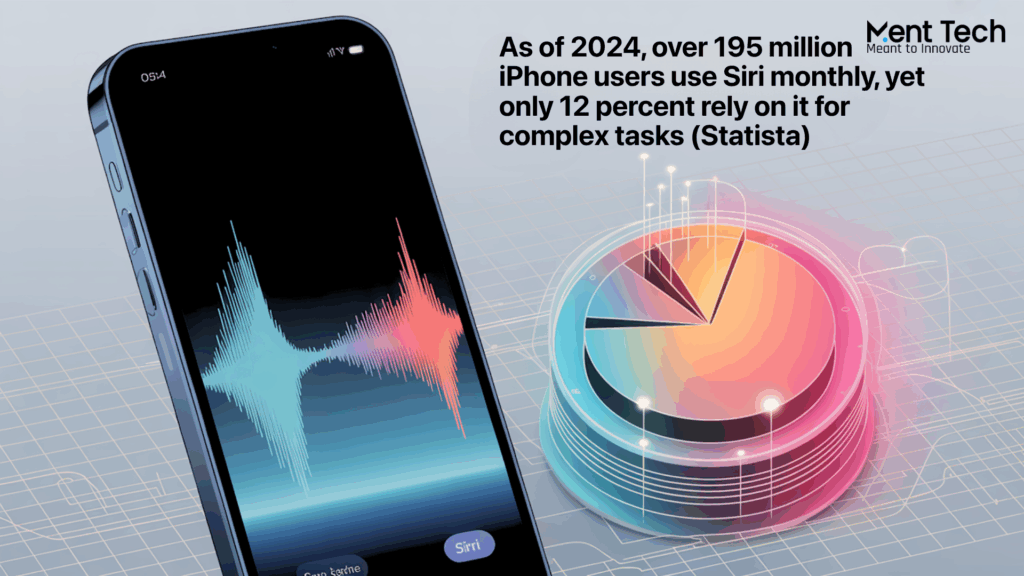

As of 2024, over 195 million iPhone owners use Siri monthly, yet only 12 percent rely on it for complex tasks (Statista).

Why AI Assistant Development Is Now a Strategic Advantage

The world is moving toward voice-first interaction. Consumers are no longer impressed by generic responses or basic commands. They expect smart assistants that can book flights, summarize emails, manage tasks, automate workflows, and even support specialized needs like AI for KYC in real time.

This is where AI assistant development becomes critical. Startups that specialize in building personalized, context-aware voice tools are no longer just shipping features; they are solving real user problems.

Take tools like Replika, Pi, and Hugging Face’s Assistant. These products are examples of what Siri still struggles to deliver: flexibility, emotional tone, memory, and multi-modal input. A custom voice assistant can now combine LLMs, on-device models, and app integrations to serve a wider range of tasks.

The AI virtual assistant market is expected to grow to $46 billion by 2027, with mobile and app-based assistants leading adoption. Startups that move fast with full-stack AI chatbot development services and support for on-device AI assistant architecture are better positioned than ever.

How Startups Can Build a Better Siri in 2025

With Apple delaying the release of its advanced voice assistant, AI startups have a unique opportunity to capture market share, attract investor attention, and lead the future of personal AI tools. Here’s how startups can build smarter, faster, and more useful Siri alternatives in 2025.

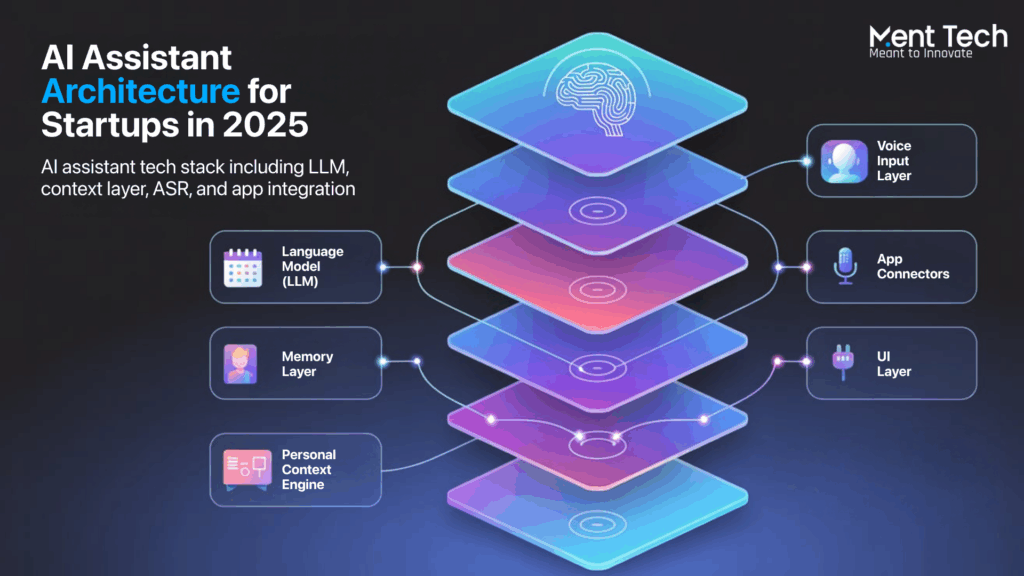

1. Choose the Right AI Assistant Development Stack

Use open-weight LLMs, fine-tuned models, or hosted APIs. Startups can now access powerful large language models, from open-weight options like Mistral or LLaMA to hosted models like Claude, to build conversational capabilities into their AI assistants. The right foundation enables accurate, real-time conversations.

Integrate context-aware memory layers. Use AI memory systems that retain user preferences, past commands, and behavioral patterns. This creates the foundation for a truly personalized Siri alternative that improves over time.
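A memory layer can start much simpler than it sounds. The sketch below is a minimal, hypothetical example (`UserMemory` and its methods are illustrative names, not any product's API): it keeps explicit preferences and a bounded history of recent commands, derives one simple behavioral pattern (the most frequent requests), and renders both as text that could be prepended to an LLM prompt.

```python
from collections import Counter, deque
from dataclasses import dataclass, field


@dataclass
class UserMemory:
    """Hypothetical per-user memory: explicit preferences plus recent commands."""

    max_history: int = 50
    preferences: dict[str, str] = field(default_factory=dict)
    history: deque[str] = field(init=False)

    def __post_init__(self) -> None:
        # A bounded deque drops the oldest command once max_history is reached.
        self.history = deque(maxlen=self.max_history)

    def remember_preference(self, key: str, value: str) -> None:
        self.preferences[key] = value

    def log_command(self, command: str) -> None:
        self.history.append(command)

    def frequent_commands(self, n: int = 3) -> list[str]:
        # A simple behavioral pattern: the user's most common recent requests.
        return [cmd for cmd, _ in Counter(self.history).most_common(n)]

    def context_prompt(self) -> str:
        # Render memory as text that can be prepended to an LLM prompt.
        prefs = "; ".join(f"{k}={v}" for k, v in sorted(self.preferences.items()))
        habits = ", ".join(self.frequent_commands())
        return f"User preferences: {prefs or 'none'}. Frequent requests: {habits or 'none'}."
```

Bounding the history keeps memory use predictable on a phone; a production version would also persist this state and let users inspect or delete it.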

2. Build for Personal Context and Workflow Automation

Understand personal context across apps. Build a system that knows who the user is, what they do, and how they interact with other tools. AI assistants in 2025 must integrate personal data to offer relevant actions.

Trigger cross-platform actions. Design the assistant to open apps, manage workflows, and complete tasks like sending messages, creating events, or managing files without switching apps.
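One common way to structure cross-app actions is a registry that maps intents to handlers. The sketch below assumes hypothetical `send_message` and `create_event` handlers that only return strings, and uses simple keyword matching as a stand-in for the LLM or classifier a real assistant would use to detect intent.

```python
from typing import Callable

# Registry mapping an intent name to the handler that performs the action.
ACTIONS: dict[str, Callable[[str], str]] = {}


def action(name: str) -> Callable[[Callable[[str], str]], Callable[[str], str]]:
    """Decorator that registers a handler under an intent name."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        ACTIONS[name] = fn
        return fn
    return register


@action("send_message")
def send_message(text: str) -> str:
    # Placeholder: a real assistant would call a messaging API here.
    return f"Message queued: {text}"


@action("create_event")
def create_event(text: str) -> str:
    # Placeholder: a real assistant would call a calendar API here.
    return f"Event drafted: {text}"


# Keyword rules stand in for an LLM- or classifier-based intent detector.
INTENT_KEYWORDS: dict[str, tuple[str, ...]] = {
    "send_message": ("text", "message", "tell"),
    "create_event": ("schedule", "meeting", "remind"),
}


def route(utterance: str) -> str:
    """Pick the first intent whose keywords appear in the utterance and run it."""
    words = utterance.lower().split()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(k in words for k in keywords):
            return ACTIONS[intent](utterance)
    return "Sorry, I can't do that yet."
```

Keeping every handler behind a single `route` function means a smarter intent detector can replace the keyword rules later without touching the actions themselves.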

3. Enable Seamless Voice Interactions

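Leverage custom voice assistant integration. Use tools like Whisper, Deepgram, or your own ASR to deliver fast and accurate speech recognition. Running a model like Whisper locally keeps recognition on-device, while hosted APIs like Deepgram send audio to the cloud. Either way, the goal is an assistant that feels responsive and human.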

Deploy across mobile, browser, and desktop. Build the assistant to function across all major environments: iOS, Android, desktop, and web. This multiplatform reach ensures users can get help whenever and wherever they need it.

4. Deliver a Branded AI Agent Experience

Use custom UX and branded personalities. The design of your AI assistant should reflect your brand. Add visual identity, tone of voice, and user interface components that align with your company’s values.

Offer AI chatbot development services for your users. If your SaaS or product platform can embed AI assistants for its own users, consider offering assistant-as-a-service to create new revenue and adoption streams.

5. Partner With AI Agent Development Experts

Build with companies that understand AI-first products. Ment Tech Labs works directly with AI startups and enterprises to deliver high-performance AI assistants. From UX planning and API architecture to product deployment and on-device optimization, we support every layer of the stack.

Accelerate launch timelines with real experience. Our team has worked on 100+ AI agent deployments across sectors, leveraging experience across top AI platforms to move fast and build right. We know how to take a concept from idea to MVP and scale to global users without unnecessary delays or complexity.

What Startups Can Learn From Apple’s Siri Struggles

Apple’s delay in shipping its next-generation AI assistant is more than just a product hiccup; it’s a case study in how even tech giants can misjudge what users actually need from voice-based AI.

Here’s what AI startups can extract from Apple’s experience:

1. Precision Over Hype Wins Every Time

Siri’s underperformance, where it reportedly worked correctly only two-thirds of the time, shows that incomplete AI deployment can do more harm than good. Startups offering adaptive AI solutions should prioritize reliability, context-awareness, and user control over flashy features.
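One way to put "reliability first" into practice is to gate each release on a measured pass rate. The sketch below is a hypothetical harness: `Case`, `pass_rate`, `ready_to_ship`, and the 0.95 threshold are illustrative choices, not an industry standard.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    utterance: str
    expected_action: str


def pass_rate(assistant: Callable[[str], str], cases: list[Case]) -> float:
    """Fraction of test utterances for which the assistant picks the expected action."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = sum(assistant(c.utterance) == c.expected_action for c in cases)
    return passed / len(cases)


def ready_to_ship(rate: float, threshold: float = 0.95) -> bool:
    # Release gate: block the launch if reliability is below the chosen bar.
    return rate >= threshold


if __name__ == "__main__":
    def toy_assistant(utterance: str) -> str:
        # Deliberately naive: anything without "meeting" becomes a message.
        return "create_event" if "meeting" in utterance else "send_message"

    cases = [
        Case("text mom I'm on my way", "send_message"),
        Case("book a meeting with the team", "create_event"),
        Case("set a timer for ten minutes", "start_timer"),
    ]
    rate = pass_rate(toy_assistant, cases)
    print(f"pass rate {rate:.0%}, ready to ship: {ready_to_ship(rate)}")
```

The toy assistant gets two of three cases right, the same two-thirds success rate reported for Siri, so it prints a 67% pass rate and fails the gate. A real test set would be far larger and cover edge cases such as ambiguous or multi-step requests.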

2. The Market Wants On-Device AI Assistants

Apple’s push for on-device AI models and context-driven assistants reflects a broader trend: people want privacy-first, responsive voice AI that works offline. Startups focusing on lightweight models optimized for mobile or edge devices are more likely to capture this demand.

3. Multi-App Interoperability Is Now Expected

Siri’s promise of cross-app actions is what users want, but startups can actually ship it faster. If your AI assistant can handle task routing, integrations, and contextual commands across apps like WhatsApp, Google Calendar, and Notion, you’re already ahead.

4. Speed of Execution Matters More Than Brand Trust

Apple delayed Siri’s launch to meet its “quality bar,” but in fast-moving AI markets, speed plus stability beats waiting years for perfection. Partnering with an experienced AI development company can help startups release minimum viable AI assistants that get smarter over time while maintaining reliability.

How to Build Your Own Voice AI with Open Tools

Building a powerful, Siri-like voice assistant no longer requires massive budgets or proprietary tech. With the right open-source tools and frameworks, startups can create fast, privacy-friendly, and fully customizable AI assistants. Here’s the process:

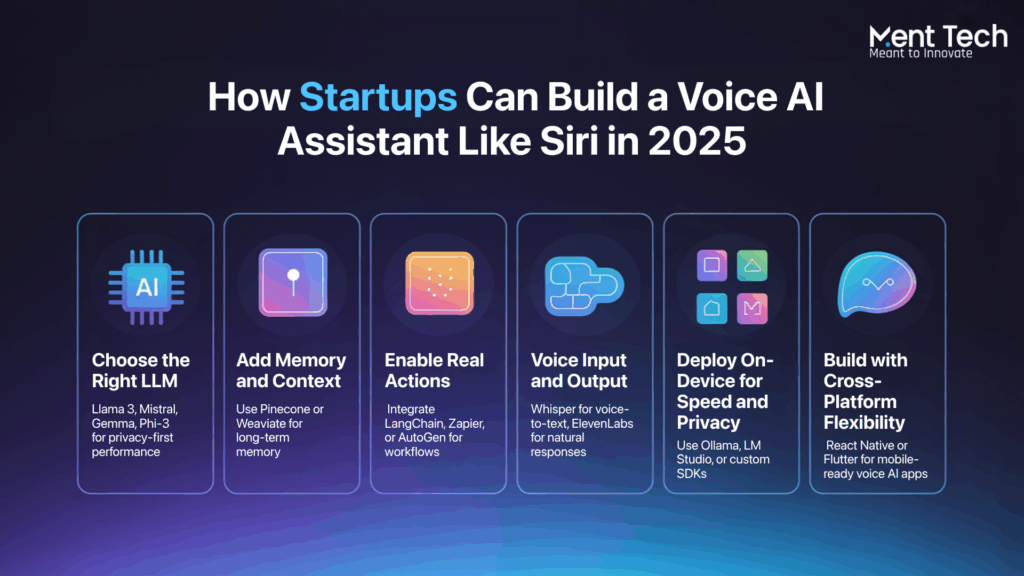

1. Choose the Right LLM

Select an open-source large language model that balances performance, cost, and privacy. Options like LLaMA 3, Mistral, Gemma, or Phi-3 offer strong conversational abilities, and their smaller variants can run on-device for better security and privacy. Your choice depends on whether you prioritize speed, accuracy, or multilingual support.

2. Add Memory and Context

A great voice AI remembers past interactions. Use vector databases like Pinecone or Weaviate to store user preferences, conversation history, and context. This “long-term memory” enables more personalized, human-like conversations over time.
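To make long-term memory concrete, the sketch below mimics what a vector database does: store each memory alongside an embedding and return the entries most similar to a query by cosine similarity. It is a simplified stand-in, not the Pinecone or Weaviate API; the `embed` function uses word counts instead of a real embedding model, so it only matches exact words.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """In-memory stand-in for a vector database such as Pinecone or Weaviate."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Rank every stored memory by similarity to the query; return the top k.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

Retrieved memories are typically prepended to the LLM prompt. Swapping `embed` for a real embedding model, and `MemoryStore` for a vector database client, is what lets a production assistant match paraphrases such as "dinner spot" and "restaurant".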

3. Enable Real Actions

Use agent frameworks such as LangChain or AutoGen, together with automation services like Zapier, to make your assistant truly useful. This allows it to perform real-world actions like sending messages, scheduling meetings, or controlling smart devices, not just chat.
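Under the hood, real actions usually mean the model emits a structured tool call that your code validates before executing anything. Frameworks like LangChain and AutoGen provide their own abstractions for this; the framework-free sketch below only illustrates the pattern, with hypothetical `schedule_meeting` and `send_message` tools and an assumed JSON shape of `{"tool": ..., "args": {...}}`.

```python
import json
from typing import Any, Callable


def schedule_meeting(title: str, time: str) -> str:
    # Hypothetical action; a real version would call a calendar API.
    return f"Scheduled '{title}' at {time}"


def send_message(to: str, body: str) -> str:
    # Hypothetical action; a real version would call a messaging API.
    return f"Sent to {to}: {body}"


# Tool registry: name -> (function, exact set of required argument names).
TOOLS: dict[str, tuple[Callable[..., str], set[str]]] = {
    "schedule_meeting": (schedule_meeting, {"title", "time"}),
    "send_message": (send_message, {"to", "body"}),
}


def execute_tool_call(raw: str) -> str:
    """Parse a model's JSON tool call, validate it, and run the matching action."""
    try:
        call: Any = json.loads(raw)
    except json.JSONDecodeError:
        return "error: model output was not valid JSON"
    if not isinstance(call, dict):
        return "error: expected a JSON object"
    name = call.get("tool")
    args = call.get("args", {})
    if not isinstance(name, str) or name not in TOOLS:
        return f"error: unknown tool {name!r}"
    fn, required = TOOLS[name]
    if not isinstance(args, dict) or set(args) != required:
        return f"error: {name} expects arguments {sorted(required)}"
    return fn(**args)
```

Rejecting unknown tools and unexpected arguments before calling anything also limits the damage from the prompt-injection risks described earlier: the model can only trigger actions you registered, with the arguments you expect.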

4. Implement Voice Input and Output

For natural voice interactions, pair your LLM with speech-to-text and text-to-speech tools. Whisper by OpenAI or Deepgram can handle voice input, while ElevenLabs or Coqui TTS generate realistic voice responses.
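The voice loop itself is a three-stage pipeline: speech-to-text, the language model, then text-to-speech. The sketch below defines each stage as a small interface so engines like Whisper, Deepgram, ElevenLabs, or Coqui TTS could be wrapped in adapters and swapped freely. The adapters are not shown; the stub classes are hypothetical placeholders that make the pipeline testable without audio hardware.

```python
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, prompt: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


class VoiceAssistant:
    """One conversational turn: audio in -> text -> LLM reply -> audio out."""

    def __init__(self, stt: SpeechToText, llm: LanguageModel, tts: TextToSpeech) -> None:
        self.stt, self.llm, self.tts = stt, llm, tts

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.stt.transcribe(audio)
        answer = self.llm.reply(text)
        return self.tts.synthesize(answer)


# Stub components; real adapters would wrap an ASR engine, an LLM, and a TTS engine.
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")  # pretend the "audio" is already text


class EchoLLM:
    def reply(self, prompt: str) -> str:
        return f"You said: {prompt}"


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")  # pretend the encoded text is audio
```

In production each stage would typically stream partial results to cut latency, but the interface boundaries can stay the same.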

5. Deploy On-Device for Speed and Privacy

Run your AI locally using tools like Ollama or LM Studio on desktops, or custom SDKs on mobile devices. On-device AI avoids network round-trips, works offline, and keeps sensitive data private.
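As one concrete example, Ollama serves a local HTTP API (by default on port 11434) that an assistant can call without sending data off the machine. The sketch below uses only the standard library and assumes Ollama is running and a model named `llama3` has been pulled. Ollama and LM Studio target desktops and servers, so a phone deployment would need a mobile runtime instead.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3", timeout: float = 60.0) -> str:
    # Assumes `ollama serve` is running and the model was pulled (e.g. `ollama pull llama3`).
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        # With "stream": False, Ollama returns one JSON object whose "response" holds the text.
        return json.loads(resp.read())["response"]
```

Separating request construction from the network call keeps the payload easy to test and makes it simple to point the assistant at a different local runtime later.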

6. Build with Cross-Platform Flexibility

Make your assistant available everywhere: mobile, web, and desktop. Use frameworks like React Native or Flutter to create cross-platform apps that work seamlessly across iOS, Android, and more.

Real-World Startup Use Cases of Voice AI in 2025

From fintech to productivity, startups across industries are embedding voice AI in smart, surprising ways. Here are some standout examples to inspire your next move:

1. Customer support for fintech

A UAE-based fintech app reduced response time by 60 percent by deploying a multilingual AI voice assistant trained on past support calls and FAQs.

2. Smart voice scheduling for freelancers

A productivity tool in Singapore introduced a calendar assistant that reschedules meetings and provides voice summaries of upcoming tasks through WhatsApp.

3. Voice command in healthcare dashboards

A telemedicine platform in Europe added voice navigation for doctors to pull up patient histories hands-free, saving time during remote consultations.

4. Web3 portfolio tracker with voice

A DeFi portfolio tool enabled wallet queries via voice prompts, allowing users to track yield farming and staking performance on the go.

5. In-app AI tutors for edtech

An edtech app in India launched voice-powered revision tutors that quiz students verbally and respond with contextual feedback.

These startups show that voice AI in 2025 is more than a novelty; it is increasingly a product advantage.

Why This Moment Matters for Voice AI Startups

Apple’s delay is not just a product update pushed back. It is a clear signal that even the biggest tech companies are struggling to keep pace in a fast-moving AI world. For startups, this is not a setback but an open door.

The demand for intelligent, personalized voice assistants is growing every day. From trading terminals and healthcare apps to productivity tools, AI journal assistants, and smart devices, users want voice interfaces that truly understand them and get things done.

Startups have a unique edge. You can build with flexibility, iterate fast, and solve for specific use cases that big platforms cannot reach. Whether it is real-time market insight, contextual assistance, or seamless app control, the opportunity is clear.

At Ment Tech Labs, we work with builders who want to lead this shift. Our team helps you turn a voice interface into a product users trust, using the best of open-source models, secure infrastructure, and human-first design.

The next Siri will not come from Apple. It might just come from your team.

Final Thoughts

While Apple’s delay in Siri upgrades has brought its AI shortcomings into the spotlight, it’s clear that the real game is being played behind closed doors. From our perspective as an AI development company, Apple’s internal restructuring, AI talent acquisitions, and potential partnerships point toward a deeper strategic play, one that could reshape how voice assistants understand context, personalize interactions, and integrate seamlessly into daily life. Sustainable AI success comes from long-term planning and flawless execution, not rushed features.

At Ment Tech Labs, we share that vision. Our Voice AI and Adaptive AI Solutions focus on building assistants that are intelligent, reliable, and context-aware. We help businesses avoid the pitfalls of incomplete deployments, ensuring every AI solution is both innovative and practical. The future of AI will belong to those who combine ambition with precision. Get started today and turn your AI vision into reality.

FAQ

1. What does the Siri delay mean for voice AI startups in 2025?

Siri’s limited upgrades have opened a huge opportunity for startups to launch custom voice AI assistants that are faster, more flexible, and built on advanced LLMs. Companies like Ment Tech Labs help businesses capitalize on this gap with white-label AI assistant platforms.

2. How can startups build their own voice AI like Siri?

Startups can work with an AI development company to build voice assistants using LLMs, real-time transcription, and voice synthesis. At Ment Tech Labs, we offer full-stack development, from backend to UI, tailored to specific business use cases.

3. What are the benefits of launching a custom AI voice assistant?

Launching your own assistant gives you full control over branding, data, and user experience. Unlike Siri or Alexa, custom-built voice AIs can be fine-tuned for finance, trading, support, and automation with your business logic.

4. How fast can a business go from idea to a voice-ready AI product?

With our pre-trained models and integration stack, Ment Tech Labs helps startups go live with a branded voice AI assistant in just a few weeks, ready for real users, monetization, and automation from day one.

5. What technologies power a modern AI voice assistant in 2025?

Today’s assistants are powered by large language models (LLMs), speech-to-text, text-to-speech, vector databases, and agentic workflows. We combine these technologies to deliver conversational accuracy and real-time response speeds.

6. Who needs a custom voice AI assistant today?

Trading platforms, customer support teams, fintech apps, edtech platforms, and Web3 products all benefit from intelligent voice assistants that automate repetitive queries and enhance user experience.

7. What services does Ment Tech Labs offer for voice AI development?

Ment Tech Labs provides end-to-end voice AI development, including assistant design, LLM integration, deployment infrastructure, custom UI/UX, and branded white-label platforms for businesses that want to launch fast.